Once you have web performance debugging data flowing into Analytics, as for example via the site-performance-tracker plug-in, push the data to BigQuery for querying.

Default query can be the following one, yielding 2 tables with recent CLS and INP information:

/*

project_id: project-crux

analytics_id: analytics_000000000

# When copying, update the project_id with the value in project= (check the URL)

# Replace the analytics_id with the name of the table created by the BigQuery link.

*/

CREATE SCHEMA IF NOT EXISTS `project-crux.crux_data`;

CREATE OR REPLACE TABLE `project-crux.crux_data.web_vitals_recent_cls`

PARTITION BY DATE(event_timestamp)

CLUSTER BY metric_name

AS

SELECT

ga_session_id,

evt.* EXCEPT (event_name),

event_name AS metric_name,

FORMAT_TIMESTAMP('%Y%m%d', event_timestamp) AS event_date

FROM

(

SELECT

ga_session_id,

ARRAY_AGG(custom_event) AS events

FROM

(

SELECT

ga_session_id,

STRUCT(

country,

device_category,

device_os,

traffic_source,

page_path,

debug_target,

event_timestamp,

event_name,

metric_value) AS custom_event

FROM

(

SELECT

(SELECT value.int_value FROM UNNEST(event_params) WHERE key = 'ga_session_id')

AS ga_session_id,

ANY_VALUE(device.category) AS device_category,

ANY_VALUE(device.operating_system) AS device_os,

ANY_VALUE(traffic_source.source) AS traffic_source,

ANY_VALUE(

REGEXP_SUBSTR(

(SELECT value.string_value FROM UNNEST(event_params) WHERE key = 'page_location'),

r'^[^?]+')) AS page_path,

ANY_VALUE(

(SELECT value.string_value FROM UNNEST(event_params) WHERE key = 'debug_target'))

AS debug_target,

ANY_VALUE(geo.country) AS country,

ANY_VALUE(event_name) AS event_name,

TIMESTAMP_MICROS(MAX(event_timestamp)) AS event_timestamp,

MAX(

(

SELECT COALESCE(value.double_value, value.int_value)

FROM UNNEST(event_params)

WHERE key = 'metric_value'

)) AS metric_value

FROM

(SELECT * FROM `project-crux.analytics_000000000.events_*`)

WHERE

event_name = 'CLS'

AND TIMESTAMP_MICROS(event_timestamp) >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 7 DAY)

GROUP BY

1

)

)

WHERE

ga_session_id IS NOT NULL

GROUP BY ga_session_id

)

CROSS JOIN UNNEST(events) AS evt;

CREATE OR REPLACE TABLE `project-crux.crux_data.web_vitals_recent_inp`

PARTITION BY DATE(event_timestamp)

CLUSTER BY metric_name

AS

SELECT

ga_session_id,

evt.* EXCEPT (event_name),

event_name AS metric_name,

FORMAT_TIMESTAMP('%Y%m%d', event_timestamp) AS event_date

FROM

(

SELECT

ga_session_id,

ARRAY_AGG(custom_event) AS events

FROM

(

SELECT

ga_session_id,

STRUCT(

country,

device_category,

device_os,

traffic_source,

page_path,

debug_target,

event_timestamp,

event_name,

metric_value) AS custom_event

FROM

(

SELECT

(SELECT value.int_value FROM UNNEST(event_params) WHERE key = 'ga_session_id')

AS ga_session_id,

ANY_VALUE(device.category) AS device_category,

ANY_VALUE(device.operating_system) AS device_os,

ANY_VALUE(traffic_source.source) AS traffic_source,

ANY_VALUE(

REGEXP_SUBSTR(

(SELECT value.string_value FROM UNNEST(event_params) WHERE key = 'page_location'),

r'^[^?]+')) AS page_path,

ANY_VALUE(

(SELECT value.string_value FROM UNNEST(event_params) WHERE key = 'debug_target'))

AS debug_target,

ANY_VALUE(geo.country) AS country,

ANY_VALUE(event_name) AS event_name,

TIMESTAMP_MICROS(MAX(event_timestamp)) AS event_timestamp,

MAX(

(

SELECT COALESCE(value.double_value, value.int_value)

FROM UNNEST(event_params)

WHERE key = 'metric_value'

)) AS metric_value

FROM

(SELECT * FROM `project-crux.analytics_000000000.events_*`)

WHERE

event_name = 'INP'

AND TIMESTAMP_MICROS(event_timestamp) >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 7 DAY)

GROUP BY

1

)

)

WHERE

ga_session_id IS NOT NULL

GROUP BY ga_session_id

)

CROSS JOIN UNNEST(events) AS evt;Partitioned tables don’t support sorting, but you can sort queries against these tables, like so, for example while also filtering to notable CLS events (for example over 0.03):

/*

project_id: project-crux

*/

# When copying, update the project_id with the value in project= (check the URL)

SELECT *

FROM `project-crux.crux_data.web_vitals_recent_cls`

WHERE metric_value >= 0.03

ORDER BY metric_value DESC;You’ll be able to download a CSV condensed with key data, which can later process.

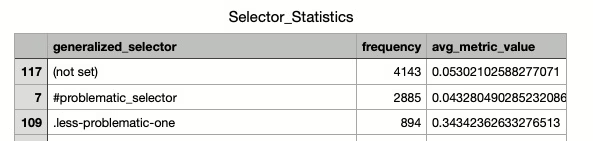

One approach which can yield a good result is to look for selectors with a high frequency.